12 Jul 2020

As the IETF nears completion of the QUIC version 1 specification, we see renewed interest in extensions such as “forward error correction” or “unreliable streams”. In both cases, the goal is to mitigate the effect of packet losses, either by using redundancy to correct them, or by delivering the data to the application immediately to reduce latency. But before we devise such schemes, I think we should try to characterize the packet losses that we observe on QUIC connections. I tried to do that using the standardized QUIC logs (QLOG) provided by many implementations of QUIC. The early result is interesting: most observed packet losses seem to happen in batches, probably caused by “slow start” ramp up of competing traffic.

Many implementations of QUIC deploy test servers to enable interoperability tests during the development of the IETF standard. Several of these provide access to logs in the QLOG format. Two research implementations, Quant and AIORTC, make those logs publicly available. I took advantage of that, downloaded a series of logs from these sites, and then wrote a Python script to analyze the “packet loss” events in these logs. I found three types of losses: pre-handshake, congestion-induced, and others.
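The first step of such a script is to pull the loss events out of each log file. The sketch below is not the script used for this study. It assumes a draft-01-style QLOG layout, where each trace names its columns in `event_fields` and each event is an array of values. It also assumes that a `packet_lost` event carries a `packet_number` field directly in its `data` object. Other QLOG draft versions place these fields differently, so treat the field names as assumptions to check against real files.

```python
import json
from typing import List, Optional, Tuple


def extract_losses(qlog_text: str) -> List[Tuple[Optional[float], Optional[int]]]:
    """Return (relative_time, packet_number) for every packet_lost event.

    Assumes a draft-01-style QLOG layout: each trace lists its column
    names in "event_fields" and each event is an array of values.
    """
    doc = json.loads(qlog_text)
    losses = []
    for trace in doc.get("traces", []):
        fields = trace.get("event_fields", [])
        for row in trace.get("events", []):
            # Map the positional event array onto its column names.
            event = dict(zip(fields, row))
            if event.get("event") != "packet_lost":
                continue
            # Assumed location of the packet number; some logs encode it as a string.
            pn = event.get("data", {}).get("packet_number")
            losses.append((event.get("relative_time"), int(pn) if pn is not None else None))
    return losses
```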

The pre-handshake losses are those happening before the QUIC handshake completes. In the script, I define “pre-handshake” as “before each end of the connection has sent at least one 1RTT packet”, i.e., before the two peers are engaged in data exchange. The pre-handshake losses constitute the bulk of the observed losses in short connections. This is easy to explain. The test servers are used for interoperability testing, and packets rejected by receivers because of interoperability issues appear lost. The traces also show some amount of “spurious losses” during the handshake, caused by initial timer values that are too short. In any case, pre-handshake losses tell us more about implementations than about network conditions.
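The “pre-handshake” rule can be applied while walking through one endpoint's log in order. The sketch below uses a hypothetical simplified event tuple `(event_name, packet_type, packet_number)` rather than raw QLOG. It treats the arrival of a 1RTT packet from the peer as evidence that the peer has sent one.

```python
from typing import Iterable, List, Tuple

# Hypothetical simplified event: (event_name, packet_type, packet_number),
# listed in log order as seen by a single endpoint.
Event = Tuple[str, str, int]


def classify_pre_handshake(events: Iterable[Event]) -> List[Tuple[int, bool]]:
    """Return (packet_number, is_pre_handshake) for each lost packet."""
    sent_1rtt = False      # this endpoint has sent at least one 1RTT packet
    received_1rtt = False  # a 1RTT packet from the peer arrived, so the peer sent one
    result = []
    for name, ptype, pn in events:
        if name == "packet_sent" and ptype == "1RTT":
            sent_1rtt = True
        elif name == "packet_received" and ptype == "1RTT":
            received_1rtt = True
        elif name == "packet_lost":
            # Pre-handshake until both ends have sent a 1RTT packet.
            result.append((pn, not (sent_1rtt and received_1rtt)))
    return result
```

For example, a lost Initial packet at the start of the log is classified as pre-handshake, while a 1RTT packet lost after both sides have exchanged 1RTT data is not.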

Congestion losses happen when the sending rate exceeds the capacity of the path. In the traces, these losses are mostly found during the “slow start” phase of the connection. In the classic slow-start algorithm, the sender doubles the congestion window every RTT until some loss occurs. The traces show that these end-of-slow-start losses often happen in big batches. In the script, we characterize congestion losses as those happening when the measured round-trip time (RTT) approaches the maximum RTT observed for the whole connection. Observing these losses tells us more about the ramp-up strategy of the implementation than about network conditions.
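The congestion criterion needs two passes: one to find the connection's maximum RTT, and one to compare each loss against it. In the sketch below, “approaches the maximum” is made concrete as “at least 80% of the maximum”. That threshold is a hypothetical value picked for illustration, not the one used in the actual script.

```python
from typing import List, Tuple


def classify_congestion(losses: List[Tuple[int, float]],
                        rtt_samples: List[float],
                        threshold: float = 0.8) -> List[Tuple[int, bool]]:
    """Flag each loss as congestion-induced or not.

    losses: (packet_number, rtt_measured_at_loss_time) pairs.
    rtt_samples: every RTT sample of the connection, used to find its maximum.
    threshold: hypothetical fraction of the maximum RTT that counts as "near".
    """
    max_rtt = max(rtt_samples)
    return [(pn, rtt >= threshold * max_rtt) for pn, rtt in losses]
```

With a maximum RTT of 100 ms, a loss observed while the RTT is 95 ms is flagged as congestion, while a loss at 30 ms is left for the “other” category.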

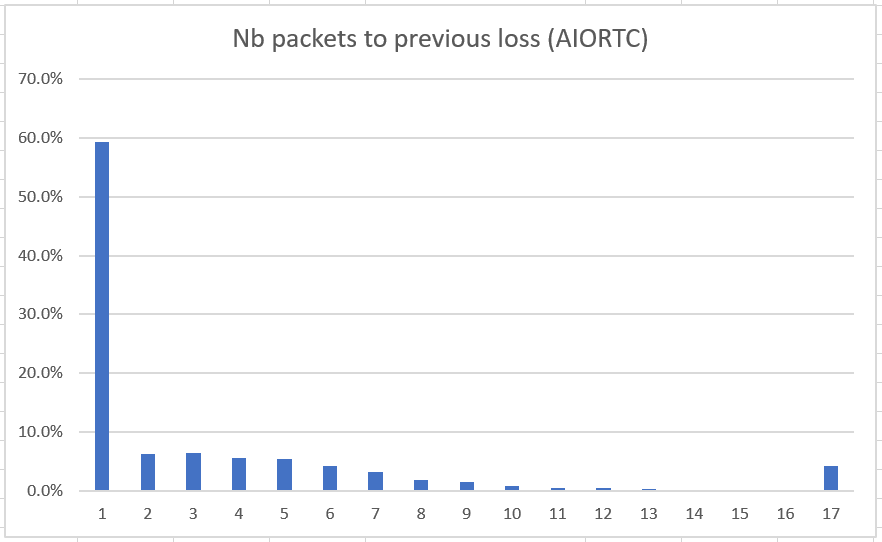

The script then focuses on the “other” losses, those that occur after the handshake and outside of congestion periods. The goal was to assess the packet loss regime expected for long connections, such as video conference sessions. Several connections in the logs document the transmission of thousands of packets, giving us some data on this loss regime. The script counts the losses and, for each loss, the number of packets since the previous loss.
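Counting the packets since the previous loss amounts to building a histogram of gaps between consecutive lost packet numbers. A gap of 1 means that packet N-1 was also lost. This minimal sketch assumes the input is the list of “other” losses for one connection. It treats packet numbers as consecutive, ignoring any numbers the sender may have skipped.

```python
from collections import Counter
from typing import Iterable


def loss_gap_histogram(lost_pns: Iterable[int]) -> Counter:
    """Map each gap size to the number of losses that followed the
    previous loss by that many packet numbers (gap 1 = back-to-back)."""
    pns = sorted(set(lost_pns))
    return Counter(b - a for a, b in zip(pns, pns[1:]))
```

For the losses `[10, 11, 12, 30, 31]`, the histogram is `Counter({1: 3, 18: 1})`: three back-to-back losses and one gap of 18 packets.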

The histogram above shows that for the long connections (>1000 packets) in the AIORTC samples, most of the losses come in batches. The overall rate of “other” losses was about 1.4%, but in almost 60% of the events reporting the loss of packet number N, packet N-1 was also lost. In almost 96% of the cases, at least one of the packets between N-1 and N-16 was lost. Instead of looking at packet losses occurring at a rate of 1.4%, it seems more accurate to speak of loss events happening at a rate of 0.1%, with each event triggering a batch of consecutive losses.
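The statistics in the paragraph above can be sketched as follows. One possible way to turn individual losses into “loss events” is to say that a loss starts a new event when none of the previous 16 packets was lost. That grouping rule is an assumption for illustration. With the reported figures, 1.4% of packets lost in events occurring at 0.1% per packet implies roughly 14 lost packets per event on average. A single 14-packet burst in 1000 packets reproduces exactly those two rates.

```python
from typing import Dict, Iterable


def burst_stats(lost_pns: Iterable[int], total_packets: int,
                window: int = 16) -> Dict[str, float]:
    """Summarize how clustered the losses of one connection are."""
    lost = set(lost_pns)
    n = len(lost)
    if n == 0:
        return {"loss_rate": 0.0, "frac_prev_lost": 0.0,
                "frac_window_lost": 0.0, "event_rate": 0.0}
    # Losses of packet N where packet N-1 was also lost.
    prev_lost = sum(1 for pn in lost if pn - 1 in lost)
    # Losses where at least one of N-1 .. N-window was also lost.
    near_lost = sum(1 for pn in lost
                    if any(pn - k in lost for k in range(1, window + 1)))
    # Assumed grouping rule: a loss with no other loss in the preceding
    # window starts a new loss event.
    events = n - near_lost
    return {
        "loss_rate": n / total_packets,
        "frac_prev_lost": prev_lost / n,
        "frac_window_lost": near_lost / n,
        "event_rate": events / total_packets,
    }
```

For example, `burst_stats(range(100, 114), 1000)` reports a loss rate of 0.014 but an event rate of only 0.001.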

These results are of course preliminary, but if confirmed they would have a big impact on the design of error mitigation strategies like FEC or unreliable delivery. FEC design is much harder if losses happen in big batches than if they happen randomly in an uncorrelated manner. If a large number of packets are lost, unreliable delivery of the remaining packets will not always enable the application to continue processing a video stream. New research is probably needed.
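A toy example shows why batches hurt FEC. Consider a hypothetical scheme in which every group of 8 data packets is protected by one XOR parity packet, which can rebuild at most one missing packet per group. This is a deliberately simple code chosen for illustration, not a scheme proposed for QUIC. The sketch counts the data packets that remain lost after repair, ignoring losses of the parity packets themselves.

```python
from collections import Counter
from typing import Iterable


def residual_losses(lost_pns: Iterable[int], group_size: int = 8) -> int:
    """Count data packets a single-parity-per-group XOR code cannot rebuild.

    One parity packet repairs a group with exactly one loss; with two or
    more losses in the same group, none of them can be recovered.
    """
    per_group = Counter(pn // group_size for pn in set(lost_pns))
    return sum(count for count in per_group.values() if count > 1)
```

Four losses spread as `[0, 8, 16, 24]` fall in four different groups and are all repaired, so `residual_losses` returns 0. The same number of losses in a batch, `[0, 1, 2, 3]`, lands in a single group, and all four stay lost.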

We can also wonder why we are seeing these loss events. I suspect that they are induced by competing connections that are ramping up their data rates using the slow-start algorithm. The competing connections create a congestion event, which causes packet losses not only for the connection that is ramping up but also for other connections sharing the same bottleneck. Driving down the frequency of these events would require concerted efforts. We can imagine deploying queue management at bottlenecks to insulate competing connections from each other, or deploying better ramp-up algorithms in end-to-end transports. If the experience of reducing bufferbloat is a guide, that could take time.

This kind of work requires access to traces. Big companies like Google or Facebook have access to a vast number of traces through their telemetry systems, but independent researchers must make do with far less data. It would be nice if multiple implementations could share sets of QLOG traces, maybe through some kind of QUIC observatory. I might try to set that up.