The list of past posts including those previously published on Wordpress is available here.

Our new congestion control algorithm, C4, is designed to work well in difficult network conditions, and in particular on Wi-Fi networks exhibiting high delay jitter. We noticed the “Wi-Fi jitter” issue two years ago (see the weird case of Wi-Fi latency spikes). We would see sudden jumps in measured RTT, from 1 or 2 milliseconds to 50 tor even 200ms. Since that, we have been looking for explanations of these observation. Our early hypotheses were that the whole Wi-Fi operation was “suspended” for short intervals, either to allow the Wi-Fi device to explore alternative radio frequencies, or perhaps to comply with regulations requiring that Wi-Fi yields to higher priority radio applications like radars at airports. Analyzing more recent traces allowed us to make progress, and explain the phenomenon without the “suspension” hypothesis.

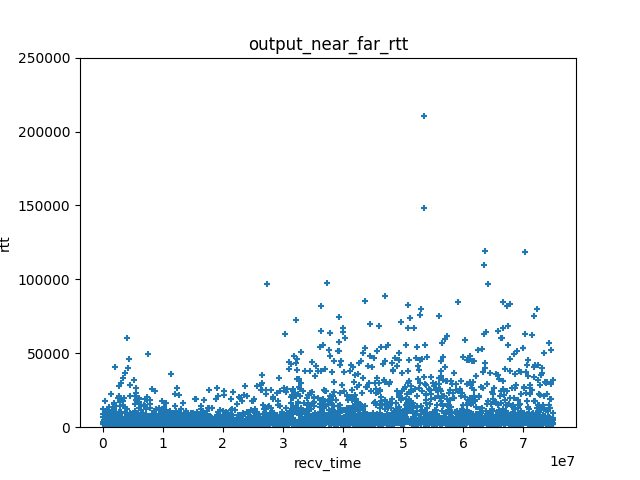

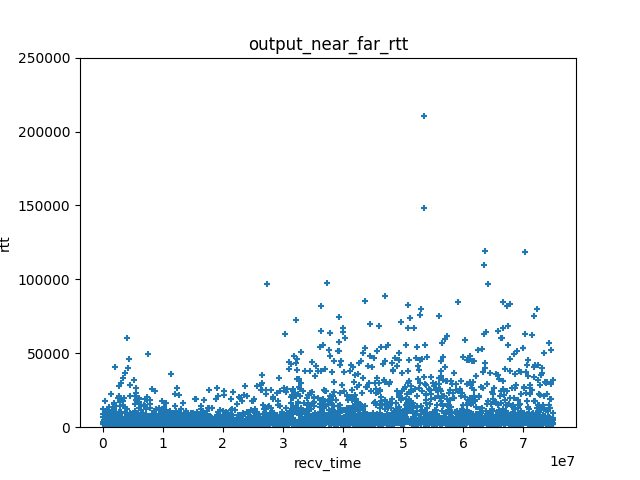

The graph above shows the evolution of RTT over a 75 second connection. It was captured using an ad hoc tool, octoping, that sent udp packets from a client to a responder and recorded the time at which an echo of these packets arrived. The session starts at a point where the transmission conditions are relatively good, before the device moves to a location where Wi-Fi conditions are very degraded and cause numerous delay spikes.

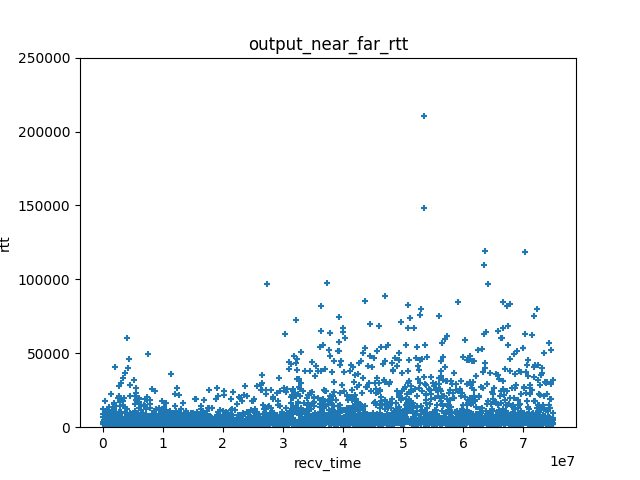

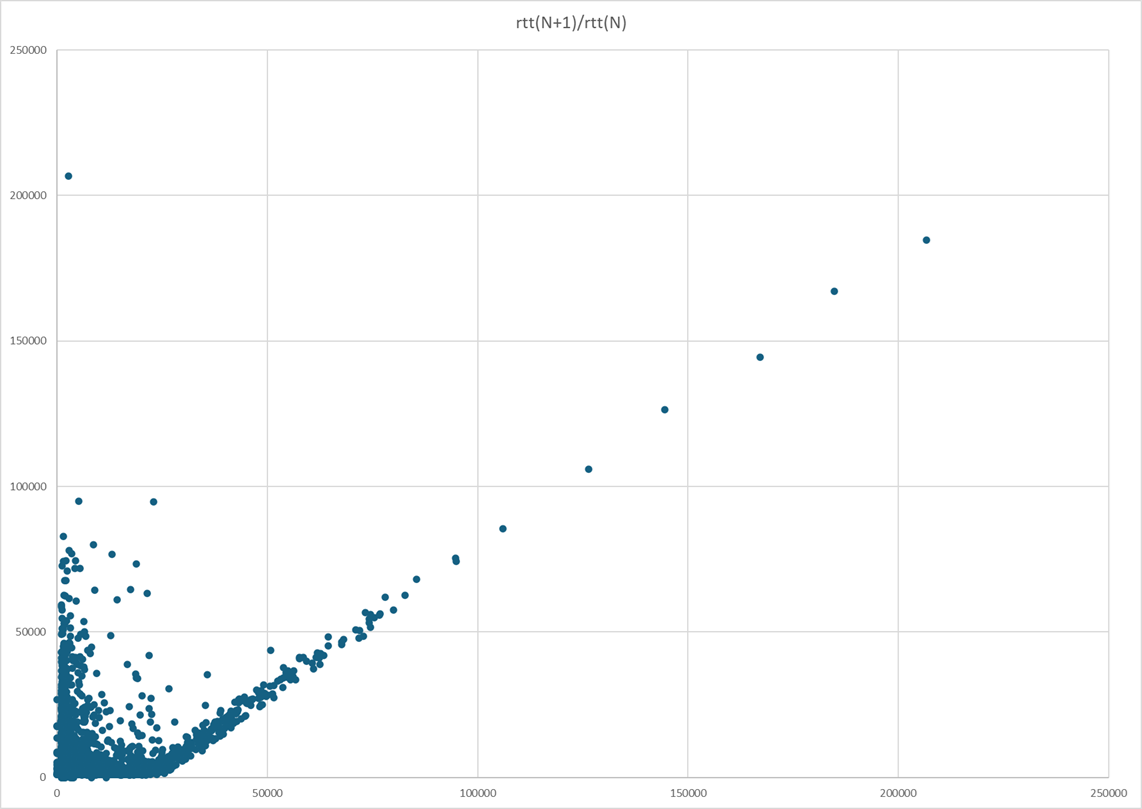

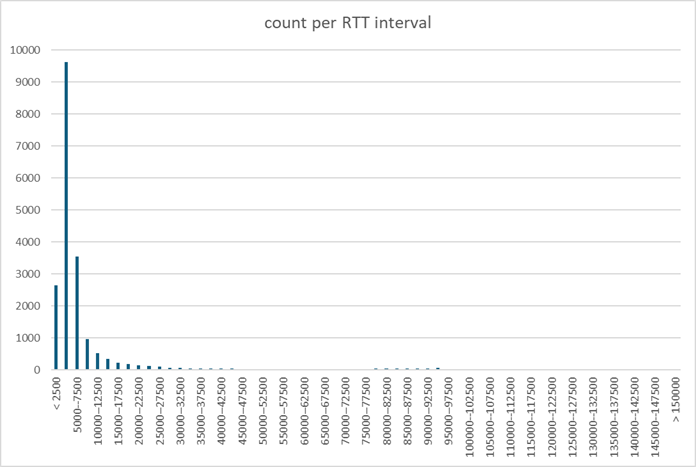

The histogram shows that most measured RTT are small, but that the distribution of RTT has a very long tail, and some kind of exponential distribution.

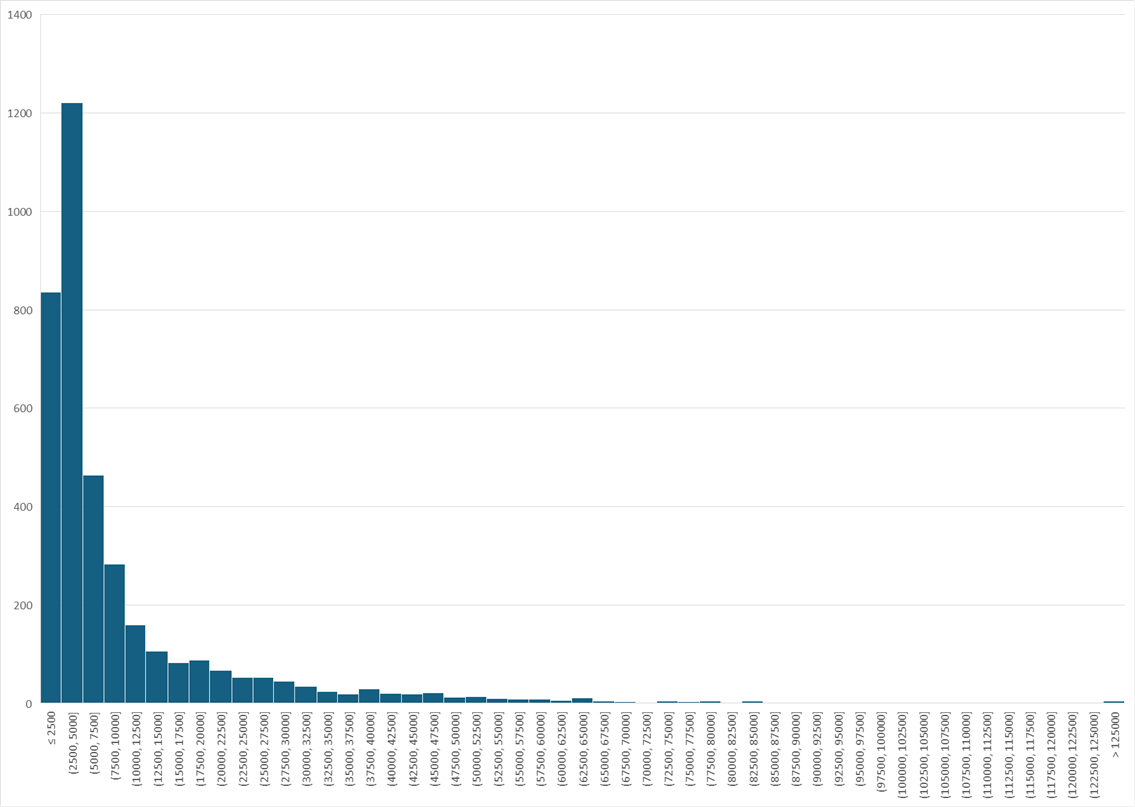

Looking further, we plotted the observed RTT as a function of the next RTT. That graph has a very distinctive shape, and a very interesting feature, with lots of high value samples clustered around a parallel to the diagonal that crosses the x-axis near the 20 millisecond mark. The rightmost points on that line are spaced 20ms apart. We know that the octoping program sent packets at 20ms intervals. What we see here is a consequence of in order delivery. If the first packet encountered a long RTT, the packet after that, sent 20ms after it, arrives at almost the same time, as if it was retained by some Wi-Fi component until it could be delivered in sequence.

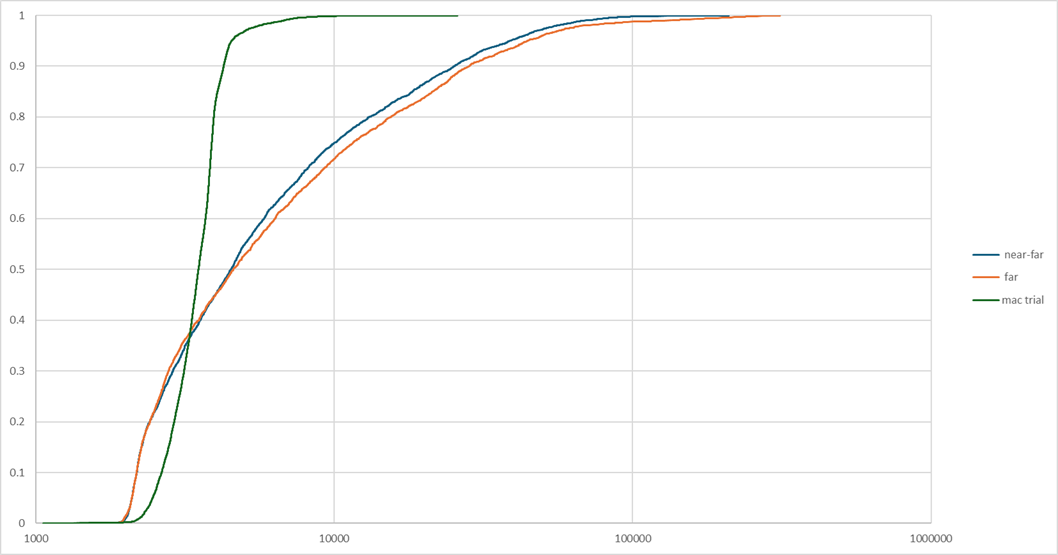

Of course, we don’t want to draw conclusions from just one sample. We have collected multiple such samples, and we do see variations between them, such as the difference showing between in cumulative frequency distributions of observed RTT in three different trials. One of this trials was done in good conditions, and it markedly differs from the other two, with the CFD showing most values clustered around the median. The other two are similar, with a much wider distribution of observed RTT, one being somewhat worse than the other.

To try get a better understanding, we took all the samples. We filtered out values that only differed from the previous observation by the interval between two probes, because in those cases the delays result from in sequence delivery rather that a random process. Then we draw the histogram of all observed values… and it does not tell us much. The leftmost part of the histogram looks like some kind of Gaussian or Poisson process, but the tail is a bit large.

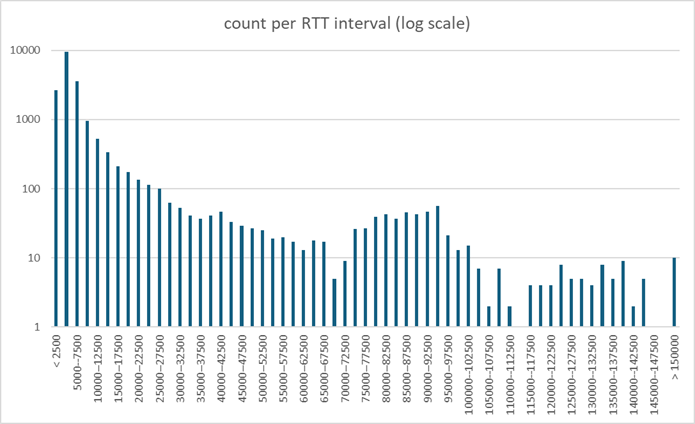

The graph that we found most informative takes the same RTT values per interval, and plots then in a log scale. We seem to have a complex process, combining a short term random process of some sort, and a secondary process that produces very large values, albeit at a low frequency.

We don’t really know what caused this, but we can think of two Wi-Fi processes, both figuring exponential backoff. The CDMA process enables multiple stations managed by different access points to share the same channel. In case of collision, they will retry at a random time picked in an interval that doubles after each collision. The CDMA collisions are generally resolved in a few milliseconds. We believe that CDMA collisions explain the left most part of the curve.

The “bumps” in the right side of the curve have to be explained by a different process that also involves exponential backoff and probably operates with a granularity measured in tens of milliseconds. This could well be due to the “frame retransmission” process that Wi-Fi device uses for the “best effort” channel. If the packet is lost, it is resent after an interval. In case of repeated losses, the repeat interval increases exponentially.

This packet repeat process is not present all the time – for example, we do not see it in the “good” trial in our graph of CFD in three different trials. We see it a lot in the “bad” samples. They were captured in a multi-appartment building. It is very likely that multiple Wi-Fi access points were operating inthat building, causing some packet collisions due to the “hidden terminal” problem.

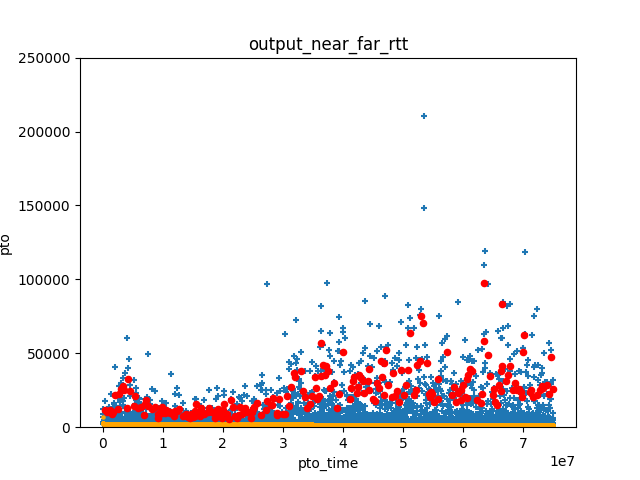

Whatever the actual cause, we do observe some pretty extreme values of RTT jitter in some Wi-Fi networks. Our first observation is that this jitter is not properly modelled by the algorithm in TCP or QUIC. For example, for QUIC, the algorithm defined in RFC 9002 compute a “smoothed RTT” by performing a exponential smoothing of RTT samples, and a “PTO” timer as the sum of the smoothed RTT and four times the estimated “RTT variance”. We programmed these formulas, and computed the PTO timer for the various traces that we collected. This timer will “fire” when the next acknowledgment does not arrive before its expiration. In the graph, we show the effect for our “near far” trial. Each blue “+” sign correspond to one RTT sample. Each red dot correspond to a firing of the PTO timer. That’s a lot of firings!

In practice, the first effect of these spurious PTO timers will be to mislead the “delay based” algorithms such as Hystart, causing an early exit of the “slow start” phase that is hard for algorithms like Cubic to compensate. We first noticed this problem in 2019 when testing Cubic for QUIC (see Implementing Cubic congestion control in Quic). It is interesting that this blog includes a graph of observed delays very similar to the WiFi trials discussed here, although at the time we focused mostly on the short-scale variations. The low-pass filtering recommended in this blog does solve the short-scale issue, but is defeated by the arrival of very large RTT. After our tests, we fixed our implementation of Cubic to return to slow start if the exit of Hystart was causes by a spurious timer, and that markedly improved the robustness of Cubic.

The second effect is to create large gaps in received packets. Supposed that a packet is delivered with a long latency. All packets sent after it will be queued, and example of “head of line” blocking. This will be perceived by the endpoint as a “suspension”, as we diagnosed in 2023. These gaps will have different effects on different congestion control algorithms. These effects need to be studied. We have thus developed an way to simulate these latencies, so that we can perform tests and simulations of algorithms operating in different Wi-Fi conditions.

We are still not quite sure of the processes causing this latency, but this should not prevent us from doing an useful model that more or less mimics the latency variations of Wi-Fi networks. After all, the models used by Hellenistic astronomers to predict the movement of planets where not exactly based on the formulas later refined by Kepler and then Newton, but their complex combinations of epicycloids was precise enough to build devices like the Antikythera mechanism and help ancient mariners navigate.

In our model, we define the latency by combining several generators, combined using a coefficient x defining how of a secondary long scale repetition is also used:

The latency for a single sample will be:

latency = N1*1ms + N2*7.5ms

if N1 >= 1:

latency -= r

The coefficient x is derived from the target average jitter value. If the target is 1ms or less, we set x to zero. If it is higher than 91ms, we set x to 1. If it is in between, we set:

x = (average_jitter - 1ms)/90ms

We have been using this simulation of jitter to test our implementation of multiple congestion control algorithms, and to guide the design of C4.

For the past 6 months, I have been working on a new congestion control algorithm, called C4 for “Christian’s Congestion Control Code”, together with Suhas Nandakumar and Cullen Jennings at Cisco. Our goal is to design a congestion control algorithm that serves well real time communication applications, and is generally suitable for use with QUIC. This leads to the following priorities:

Out of those three priorities, the support for “questionable” Wi-Fi had a major influence on the design. We got reports of video not working well in some Wi-Fi networks, notably networks in office buildings shared by multiple tenants and appartment buildings. Analyzing traces showed that these networks could exhibit very large delay jitter, as seen in the following graph:

In presence of such jitter, we see transmission sometimes stalling if the congestion window is too small. To avoid that, we have to set the congestion window to be at least as large as the product of the target data rate by the maximum RTT. This leads to our design decision of tracking two main state variables in C4: the “nominal data rate”, which is very similar to the “bottleneck bandwidth” of BBR (see BBR-draft); and the “nominal max RTT” which is set to the largest recently experienced RTT as measured in the absence of congestion.

The support for application limited flows is achieved by keeping the nominal data rate stable in periods of low application traffic, and only reducing it when congestion is actually experienced. C4 keeps the bandwidth stable by adopting a cautious bandwidth probing strategy, so that in most cases probing does not cause applications to send excess data and cause priority inversions.

C4 uses a “sensitivity” curve to obtain fairness between multiple connections. The sensitivity curve computes sensitivity as function of the nominal data rate, ensuring that flows sending at a high data rate are more sensitive than flows using a lower data rate, and thus reduce their data rate faster in response to congestion signals. Our simulations shows that this drives to fair sharing of a bottleneck between C4 flows, and also ensure reasonable sharing when sharing the bottleneck with Cubic or BBR flows.

We have published three IETF drafts to describe the C4 design, C4 algorithm specifications, and the testing of C4. The C4 repository on Github contains our C implementation of the algorithm, designed to work in Picoquic, as well as testing tools and a variety of papers discussing elements of the design.

The code itself is rather simple, less than 1000 lines of C including lots of comments, but we will need more than one blog post to explain the details. Stay tuned, and please don’t hesitate to give us feedback!

This morning, I was contacted by Victor Stewart, who has been contributing to picoquic for a long time. Victor is using the new Codex extension that OpenAI released last week. He was impressed with the results and the amazing gains in productivity.

I started from a very skeptical point of view. I saw initial attempts at using various AI systems to generate code, and they required a rather large amount of prompting to create rather simple code. I have read reports like Death by a thousand slops by Daniel Steinberg, who rails about sloppy bug reports generated by AI. So I asked Victor whether we could do a simple trial, such as fixing a bug.

Victor picked one of the pending bugs in the list of issues, issue #1937. I had analyzed that bug before, but it was not yet fixed. So we tried. While we were chatting over slack, Victor prompted Codex, and got a first reply with an explanation of the bug and a proposed fixed, more or less in real time. I asked whether it could generate a regression test, and sure enough it did just that. Another prompt to solve some formatting issues, and voila, we got a complete PR.

I was impressed. The fix itself is rather simple, but even for simple bugs like that we need to spend time analysing, finding the repro, writing a regression test, and writing a patch. We did all that in a few minutes over a chat. It would probably have required at least a couple hours of busy work. The Codex system requires a monthly subscription fee, and probably spending some time learning the tool and practicing. But when I see this kind of productivity gain, I am very tempted to just buy it!

The Great Firewall Report, a “long-term censorship monitoring platform”, just published an analysis of how the “Great firewall” handles QUIC. I found that a very interesting read.

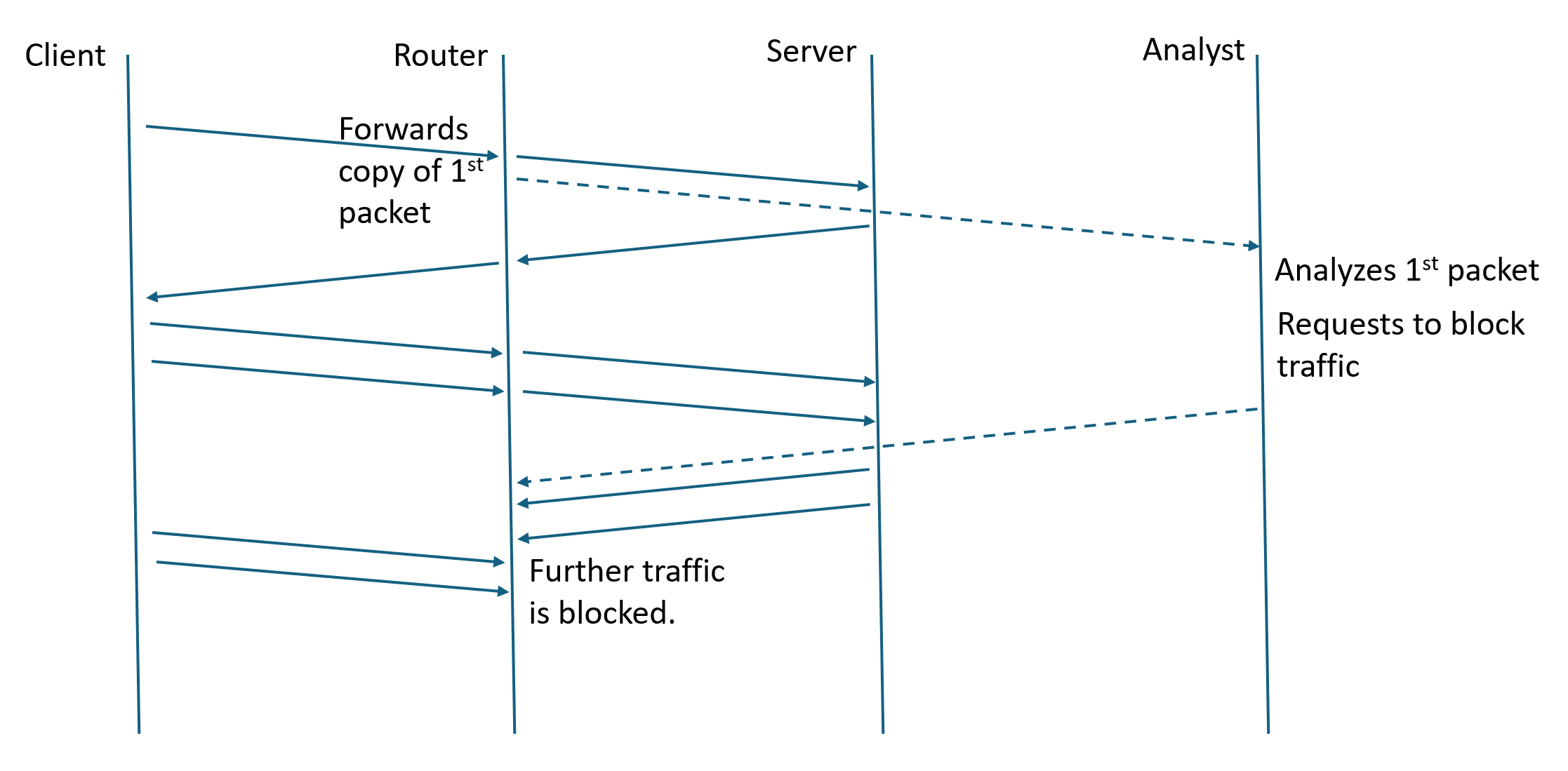

The firewall work is distributed between an analysis center and cooperating routers, presumably at the “internet borders” of the country. The router participation appears simple: monitor incoming UDP flows, as defined by the source and destination IP addresses and ports; capture the first packet of the flow and send it to the analysis center; and, receive and apply commands by the analysis center to block a given flow for 2 minutes. The analysis center performs “deep packet inspection”, looks at the “server name” parameter (SNI), and if that name is on a block list, sends the blocking command to the router.

The paper finds a number of weaknesses in the way the analysis is implemented, and suggests a set of techniques for evading the blockage. For example, clients could send a sacrificial packet full of random noise before sending the first QUIC packet, or they could split the content of the client first fly across several initial packets. These techniques will probably work today, but to me they feel like another of the “cat and mouse” game that gets plaid censors and their opponents. If the censors are willing to dedicate more resource to the problem, they could for example get copies of more than one of the first packets in a stream and perform some smarter analysis.

The client should of course use Encrypted Client Hello (ECH) to hide the name of the server, and thus escape the blockage. The IETF has spent 7 years designing ECH, aiming at exactly the “firewall censorship” scenario. In theory, it is good enough. Many clients have started using “ECH greasing”, sending a fake ECH parameter in the first packet even if they are not in fact hiding the SNI. (Picoquic supports ECH and greasing.) ECH greasing should prevent the firewall from merely “blocking ECH”.

On the other hand, censors will probably try to keep censoring. When the client uses ECH, the “real” SNI is hidden in an encrypted part of the ECH parameter, but there is still an “outer” SNI visible to observers. ECH requires that client fills this “outer” SNI parameter with the value specified by the fronting server in their DNS HTTPS record. We heard reports that some firewalls would just collect these “fronting server SNI values” and add them to their block list. They would block all servers that are fronted by the same server as a one of their blocking targets. Another “cat and mouse” game, probably.

The IETF draft defining multiparty extension for QUIC is almost ready, but there was an interesting debate during the IETF meeting in Bangkok about its status. One of the reasons is that we have only a few implementations, and not all that much deployment in production. The other reason is that congestion control for multiparty is still somewhat experimental. The draft is carefully worded to only use stable references, merely following RFC9000 and stating that there should be an independent congestion controller for each path, meaning that a QUIC multiparty connection with N paths will be equivalent to as many single path connections. Which seems reasonable, but is in fact a bit of a low bar. For example, in RFC6356, we see three more ambitious goals:

Goal 1 (Improve Throughput) A multipath flow should perform at least as well as a single path flow would on the best of the paths available to it.

Goal 2 (Do no harm) A multipath flow should not take up more capacity from any of the resources shared by its different paths than if it were a single flow using only one of these paths. This guarantees it will not unduly harm other flows.

Goal 3 (Balance congestion) A multipath flow should move as much traffic as possible off its most congested paths, subject to meeting the first two goals.

These goals are implicitly adopted in the latest specification for Multipath TCP in RFC8684.

The QUIC extension for multipath only mention a subset of these goals, stating that Congestion Control must be per-path, as specified in RFC9000. The guiding principle is the same as the Goal 2 of RFC6356, ensuring that a QUIC multipath connection using multiple paths will not use more resource than a set of independent QUIC connections running on each of these paths.

We could have a philosophical discussion about what constitute “fairness” in multipath. On one hand, we could consider that a connection between two endpoints A and B should be “fair” versus any other connections between these points. If it can use Wi-Fi and 5G at the same time, that means it should not be using more resource that the best of Wi-Fi and 5G. On the other hand, users can argue that they are paying for access to both networks, and should be able to use the sum of the resource provided by these two networks. The first approach requires users to be cooperative, the second accepts that users will be selfish. In practice, it is probably better to engineer the network as if users would be selfish – because indeed they will be. But even if we do accept selfishness, we still have at least two congestion control issues, such as shared bottleneck and simultaneous fallback.

Suppose that a QUIC congestion uses two paths, defined by two set of source and destination IP addresses. In practice, QUIC paths are initiated by clients, and the two paths are likely to use two different client IP addresses, but a single server address. We could easily find configuration in which the server side connection is the bottleneck. In that case, the congestion controllers on the two paths could be playing a zero-sum game. If one increases its sending rate, the other one will experience congestion and will need to slow down.

The counter-productive effects of this kind of shared path competition can be mitigated by “coupled congestion control” algorithms. The idea is to detect that multiple paths are using the same bottleneck, for example by noticing that congestion signals like packet losses, ECN marks or delay increases are happening simultaneously on these paths. When that happens, it might be wise for the congestion managers on each path to cooperate, for example by increasing sending rates slower than if they were alone.

The experimental algorithm proposed in RFC6356 is an example of that approach. It is a variation of the New Reno algorithm. Instead of always increasing the congestion window for a flow by “one packet per RTT per path”, it would use the minimum of that and something like “one packet per RTT across all paths”. (The actual formula is more complex, see the RFC text for details.)

I have two issues with that: it seems a bit too conservative, and in any case it will only be efficient on those paths where new Reno is adequate. Most of the Internet connections are using more modern congestion algorithms than new Reno, such as Cubic or BBR. We would have to develop an equivalent coupling algorithm for the these algorithms. But we can also notice that the formula will slow the congestion window increase even if the paths are not coupled, which is clearly not what selfish users would want. In short, this is still a research issue, which explains why the QUIC multipath draft does not mandate any particular solution.

Another issue with multipath is the “simultaneous backup” problem. Many multipath configurations are aiming for redundancy rather than load sharing. They will maintain an active path and a backup path, sending most of the traffic on the active path, and only switching to the backup path if they detect an issue. For example, they would use a Wi-Fi connection until it breaks, and then automatically start sending data on a 5G connection. The problem happens when multiple connections on multiple devices do that at the same time. They were all using the same Wi-Fi network, they all detect an issue are about the same time, so they all switch to using the 5G network around the same time. That’s the classic “thundering herd” problem – an instant surge of traffic causing immediate congestion as all these connections compete for the same 5G radio frequencies.

The “thundering herd” problem will solve itself eventually, as all connections notice the congestion and reduce their sending rate, but it would be nice if we avoided the packet losses and increased delays when these “simultaneous backups” happen. The classic solutions are to introduce random delays before backing up, and also to probe bandwidth cautiously after a backup. Again, this is largely a research issue. My main recommendation would be for networks to implement something like L4S (see RFC9330, so each connection will receive “congestion experienced” ECN marks and quickly react.

So, yes, we do have a couple of research issues for congestion control and multipath…