23 Jan 2012

It has been about 10 months since the "Comodo Hacker" managed to get false PKI certificates from the Italian reseller of the Comodo certification authority. Then, by the end of August or early September 2011, the same hacker managed to get another batch of forged PKI certificates by hacking the servers of Diginotar, a Dutch certification authority. In both cases, the forged certificates were used by the Iranian authorities to spoof servers like Gmail, presumably to spy on their opponents. The attack was stopped by revoking the forged certificates, but it was a one-time reaction. As long as we do not fix the PKI standard or the way we use it in HTTPS and other secure connections, we are at risk. Yet, I see very little activity in that direction. The IETF is in charge of "transport level security," the SSL and TLS protocols used in HTTPS, but there is no particular initiative to correct it and prevent future attacks. So, here is my analysis and a couple of modest proposals.

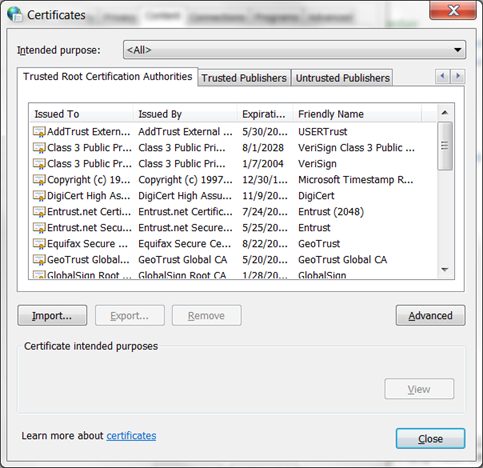

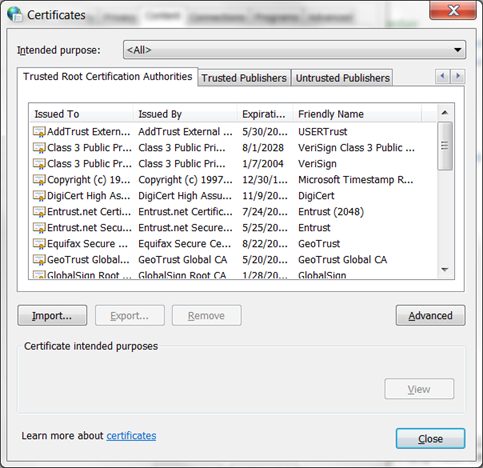

PKI stands for "Public Key Infrastructure," the standard that describes how web servers can publish their public keys, which web clients then use to establish secure HTTP connections. The public keys are just large numbers that have no meaning by themselves, unless they are tied to the name of the server by a certificate, signed by a trusted "Certification Authority." Web browsers come loaded with the certificates of certification authorities, so these signatures can be verified. For example, in Internet Explorer, the list can be seen in the "Internet Options" under the "Content" tab, by clicking the "Certificate" button. The list looks like this picture:

To simplify, we can say that according to PKI all certificate authorities are equal. Any authority in the list can sign a certificate for Google, Facebook, Microsoft, or any other server. The list itself is long enough, but behind these "root" authorities is a set of resellers, also known as "intermediate authorities," who can present special certificates signed by one of the root authorities, and thereby get the right to sign certificates for any web site. These resellers too can sign certificates for Google, Facebook, Microsoft, or any other server.

The Comodo Hacker obtained forged certificates that allowed the Iranian authorities to present, for example, a fake Gmail server. Some Iranian user would try to contact Gmail using the secure web protocol, HTTPS. The Iranian ISP, instead of sending the request directly to Google, would send it to an intermediate "spoofing" server, presumably managed by some kind of secret service. This server would show the forged credential, and the user would establish the connection. The user's browser would indicate that everything was fine and secure, and the HTTP connection would indeed be encrypted. But it would only be encrypted up to the spoofing server. That server would probably relay the connection to Gmail, so the user could read his mail. But everything the user reads or writes can be seen and copied by the spoofing server: user name, password, email, list of friends. Moreover, once the password is known, the spies can come back and read more mail. The opponents believed they were secure, but the secret police could read their messages and understand their networks. The Gestapo would have loved that.

Of course, the companies who manage the certification authorities quickly reacted to the incident. The forged Comodo certificates were revoked, and the Diginotar authority was decertified. Users were told to change their passwords. This particular attack was stopped. But stopping one attack does not eliminate the risk. There have been similar attacks in the past, when various "national firewalls" colluded with intermediate regional authorities to mount pretty much the same attack as the Iranians. In 2009, security researchers found ways to spoof certificates by exploiting a bug in Microsoft's security code. Of course, bugs were fixed and attacks were stopped, but the structural problem remains. In fact, there is a cottage industry of "SSL logging" products like this one from "Red Line Software". They sell these products to enterprises who really want to monitor what their employees do on the web, encrypted or not. The enterprise installs its own "trusted authority certificate" on the users' PCs, and voilà, SSL spoofing is enabled.

The PKI "certification chain" is the Achilles' heel of HTTPS, has been for a long time, and the Internet technologists are not doing much about it. OK, that includes me. I too should be doing something there. So here is a small proposal. In fact, here are three modest proposals. One is very easy to implement: protect the user's password, even when using HTTPS. The second is a bit harder: detect spoofing, and warn the user when someone else is listening on the connection. The third is the most difficult but also the most interesting: compute the encryption keys in a way that is impervious to SSL/TLS spoofing. All these proposals rely on the user's password, and assume that the first action done on the connection is the verification of that password.

Let's start with the simplest proposal, protecting the user password. Many web sites ask the user to provide a password. They ask for it after the TLS connection is established. Since the connection is encrypted, they just send the password "in clear text," and thus reward any SSL/TLS spoof with a clear text copy of the user password. This is silly. And it is relatively easy to fix. The password dialogue is typically implemented in JavaScript. Instead of just using JavaScript to display a form and read the password, web site programmers should include some code to send the password securely. The simplest mechanism would be to use a challenge/response protocol: the server sends a random number, and the client answers with a cryptographic hash of the random number and the client password. The spoofing server will see a random string instead of the password. In theory, the spoof is defeated… but the practice is different, so please continue to the next paragraph.
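To make the naive challenge/response idea concrete, here is a minimal sketch. It is written in Python for readability, although in a browser this logic would live in JavaScript. The function names are mine, and using HMAC-SHA256 as the "hash of the random number and the password" is an assumption, not something the scheme requires. As discussed right after, this simple form is not strong enough on its own.

```python
import hashlib
import hmac
import secrets


def make_challenge() -> bytes:
    """Server side: pick a fresh random challenge for each login attempt."""
    return secrets.token_bytes(16)


def compute_response(challenge: bytes, password: str) -> bytes:
    """Client side: hash the challenge together with the password.

    HMAC-SHA256 keyed by the password is one possible choice (an assumption).
    """
    return hmac.new(password.encode("utf-8"), challenge, hashlib.sha256).digest()


def verify_response(challenge: bytes, response: bytes, stored_password: str) -> bool:
    """Server side: recompute the expected response and compare in constant time."""
    expected = compute_response(challenge, stored_password)
    return hmac.compare_digest(expected, response)


challenge = make_challenge()
response = compute_response(challenge, "correct horse")
print(verify_response(challenge, response, "correct horse"))  # True
print(verify_response(challenge, response, "wrong guess"))    # False
```

A spoofing server in the middle sees only `challenge` and `response`, never the password itself.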

OK, anyone who knows a bit of cryptography knows that challenge/response protocols are susceptible to dictionary attacks. In our example, the spoofing server will see the challenge and then see the response. They can try a set of candidate passwords from a dictionary, until they find a password that produces the target response when hashing the challenge. With modern computers, these attacks will retrieve a weak password within minutes. So, instead of challenge/response, one should use a secure password protocol such as SRP or DH-EKE. Both protocols use cryptography tricks to make sure that even if the password is weak, the authentication is strong. The idea is simple: plan for the worst case scenario, and protect the password as if it were exchanged on an unencrypted connection. This causes a bit of additional computation on the server, but provides assurance that even if the PKI certificate is spoofed, the password will be protected.
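The dictionary attack is easy to picture in code. The sketch below assumes the response is an HMAC-SHA256 of the challenge keyed by the password; that construction, the word list, and the function names are illustrative assumptions. The point is only that an eavesdropper who holds one challenge/response pair can test guesses offline, without ever talking to the server again.

```python
import hashlib
import hmac


def compute_response(challenge: bytes, password: str) -> bytes:
    """The client's answer in a naive challenge/response login (assumed form)."""
    return hmac.new(password.encode("utf-8"), challenge, hashlib.sha256).digest()


def dictionary_attack(challenge: bytes, response: bytes, candidates):
    """Try each candidate password until one reproduces the observed response."""
    for candidate in candidates:
        if hmac.compare_digest(compute_response(challenge, candidate), response):
            return candidate
    return None


# What the spoofing server observed on the wire (illustrative values).
observed_challenge = bytes.fromhex("00112233445566778899aabbccddeeff")
observed_response = compute_response(observed_challenge, "sunshine")

# A tiny stand-in for a real dictionary of common passwords.
wordlist = ["123456", "password", "sunshine", "letmein"]
print(dictionary_attack(observed_challenge, observed_response, wordlist))  # sunshine
```

Protocols like SRP are designed so that the messages seen on the wire do not give the attacker such a test to run guesses against.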

Let's look now at the slightly more complex proposal, detecting the spoof. Detecting the attack is simple if the client already knows the authentic value of the server's certificate. In practice, that happens when the client uses a specialized application. For example, my copy of Outlook routinely detects the presence of SSL spoofing proxies, like those deployed by some enterprises. It does so because the client is configured with the "real" certificate of the mail server, and can immediately detect when something is amiss. Arguably, the simplest way to detect these spoofs would be to just keep copies of the valid certificates of the most used servers. But this simple solution may not always work. It fails if the certificate changes, or if the client is not properly configured. Knowing the server certificate in advance is good, but we may need a second protection for "defense in depth."
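Keeping a copy of the valid certificate boils down to comparing fingerprints. Here is a minimal sketch of that check, assuming the client stores a SHA-256 fingerprint of the certificate in its DER encoding. The function names and the placeholder byte strings are hypothetical; they stand in for real certificates.

```python
import hashlib
import hmac


def fingerprint(cert_der: bytes) -> str:
    """SHA-256 fingerprint of a certificate given as DER-encoded bytes."""
    return hashlib.sha256(cert_der).hexdigest()


def matches_pin(presented_cert_der: bytes, pinned_fingerprint: str) -> bool:
    """True if the certificate shown on this connection is the one we stored."""
    return hmac.compare_digest(fingerprint(presented_cert_der), pinned_fingerprint)


# Placeholder bytes standing in for real DER certificates (illustration only).
real_cert = b"DER bytes of the genuine server certificate"
forged_cert = b"DER bytes of a certificate issued to the spoofing server"

pinned = fingerprint(real_cert)          # stored when the client was configured
print(matches_pin(real_cert, pinned))    # True
print(matches_pin(forged_cert, pinned))  # False
```

In a Python client, the DER bytes of the certificate presented on a live connection can be obtained with `ssl.SSLSocket.getpeercert(binary_form=True)`. The weakness noted above still applies: the check fails as soon as the server legitimately renews its certificate and the stored fingerprint is not updated.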

The secure password verification protocols, SRP and DH-EKE, can do more than merely verify a password. They provide mutual authentication, and allow client and server to negotiate a strong encryption key. If the authentication succeeds, the server has verified the client's identity, but the client has also verified that the server knew the password. At that point, the client knows that it is speaking to the right server, although there may be a "man-in-the-middle" spying on the exchange. Suppose now that we augment the password exchange with a certificate verification exchange. The server sends to the client the value of the real certificate, encrypted with the strong encryption key that was just negotiated: the client can decrypt it, detect any mismatch, and thus detect the "man in the middle." We could also let the client send to the server a copy of the certificate received during the SSL/TLS negotiation, allowing the server to detect the man in the middle and take whatever protective action is necessary. The users could be warned that "someone is spying on your connection," and the value of the attack would be greatly diminished.
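Here is one way the certificate verification exchange could look, as a sketch under stated assumptions. The text above speaks of sending the certificate encrypted with the negotiated key. To stay within the Python standard library, this sketch swaps in a keyed MAC (HMAC-SHA256) over the certificate fingerprint, which serves the same purpose of binding the certificate to a key the man in the middle does not know. The session key is assumed to come from an earlier SRP or DH-EKE run, and all names are hypothetical.

```python
import hashlib
import hmac


def certificate_tag(session_key: bytes, cert_der: bytes) -> bytes:
    """MAC over the certificate fingerprint, keyed by the password-derived key."""
    cert_hash = hashlib.sha256(cert_der).digest()
    return hmac.new(session_key, cert_hash, hashlib.sha256).digest()


def client_detects_spoof(session_key: bytes, cert_seen_in_tls: bytes,
                         tag_from_server: bytes) -> bool:
    """Client side: True if the certificate seen in TLS is not the server's own."""
    expected = certificate_tag(session_key, cert_seen_in_tls)
    return not hmac.compare_digest(expected, tag_from_server)


# Stand-in for the key negotiated by SRP or DH-EKE (assumption for the demo).
session_key = hashlib.sha256(b"stand-in for the negotiated key").digest()
real_cert = b"DER bytes of the genuine server certificate"
forged_cert = b"DER bytes of the spoofing server certificate"

# The real server tags the certificate it actually owns.
tag = certificate_tag(session_key, real_cert)

print(client_detects_spoof(session_key, real_cert, tag))    # False: direct connection
print(client_detects_spoof(session_key, forged_cert, tag))  # True: someone is in the middle
```

The spoofing server can relay the tag but cannot produce a valid tag for its own forged certificate, because it never learns the session key. The reverse check, where the client tags the certificate it received and the server compares, would use the same two functions.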

Password protection and spoof detection that rely on scripts can be spoofed, and that is a big weakness. Both password protection and spoof detection will typically be implemented in JavaScript, and the scripts will be transmitted with the web page content. The attacker could rewrite the scripts and replace the protected password exchange with a clear text exchange. The client will then send the password in clear text to the spoofing server, which will execute the protected exchanges with the real server. The protection could be defeated. This code spoofing attack is quite hard to prevent without some secure way of installing code on the client. This would lead to a cat-and-mouse game, in which the servers keep obfuscating the code in the web page to prevent detection and replacement by the spoof, while the spies keep analyzing the new versions of the web pages to make sure that they can keep intercepting exchanges. Arguably, the cat-and-mouse game is better than nothing. In any case, it will greatly increase the cost of managing spoofing servers. But we want a better solution.

We could solve the problem by installing dedicated software on the client's machine. Instead of using a web browser to access Gmail or Facebook, the client would use an app. The application may include a copy of the real certificate of the server, and will ensure that the connection is properly secured. The problem with that solution is scale. To stay secure, we would have to install an app for each of the websites that we may visit, and the web sites will have to develop an app for each of the platforms that their clients may use. This is a tall order. It might work for very big services like Facebook, but it will be very difficult for small websites.

My more complex proposal is to redefine the TLS protocol, to defend against certificate spoofing. In the most used TLS mechanism, the server sends a certificate to the client. The client picks a random number to be used as encryption key, encrypts this number with the public key in the certificate, and sends the encrypted value to the server. The security of that mechanism relies entirely on the security of the server's certificate. If we want a mechanism that resists spoofing of the server certificate, we need an alternative.
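To see why everything hinges on the certificate, here is a toy sketch of that key exchange. It uses textbook RSA with tiny numbers, which is insecure on purpose and omits the padding and everything else a real TLS stack does. The key values and function names are illustrative assumptions. The client simply encrypts its random secret to whatever public key the certificate carries.

```python
import secrets

# Toy RSA key pairs built from tiny textbook primes (insecure, illustration only).
SERVER_KEY = {"n": 3233, "e": 17, "d": 2753}   # p = 61, q = 53
ATTACKER_KEY = {"n": 3127, "e": 3, "d": 2011}  # p = 53, q = 59


def client_key_exchange(certificate_public_key: dict):
    """Client: pick a random secret and encrypt it with the certificate's key."""
    secret = 2 + secrets.randbelow(3000)  # small enough for both toy moduli
    encrypted = pow(secret, certificate_public_key["e"], certificate_public_key["n"])
    return secret, encrypted


def decrypt_secret(encrypted: int, private_key: dict) -> int:
    """Holder of the private key recovers the client's secret."""
    return pow(encrypted, private_key["d"], private_key["n"])


# Normal case: the certificate carries the real server's public key.
secret, encrypted = client_key_exchange({"n": SERVER_KEY["n"], "e": SERVER_KEY["e"]})
print(decrypt_secret(encrypted, SERVER_KEY) == secret)    # True

# Spoofed case: a forged certificate carries the attacker's public key instead.
secret, encrypted = client_key_exchange({"n": ATTACKER_KEY["n"], "e": ATTACKER_KEY["e"]})
print(decrypt_secret(encrypted, ATTACKER_KEY) == secret)  # True: the attacker has the key
```

Nothing in the exchange itself tells the client which of the two cases it is in; only the certificate does.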

There is a variant of TLS authentication that relies only on a secure password exchange. SRP-TLS uses the extension mechanisms defined in the TLS 1.2 framework to implement mutual authentication and key negotiation using the strong password verification protocol, SRP. An extension to the client "Hello" message carries the client name, while the "Server Key Exchange" and "Client Key Exchange" carry the encrypted values defined in the SRP algorithm. Compared to classic TLS, SRP-TLS has the advantage of not requiring a PKI certificate, and thus being impervious to PKI spoofing. It has the perceived disadvantage of relying only on passwords for security, exposing a security hole whenever the password is compromised or guessed, arguably a more frequent event than certificate spoofing. It also has the disadvantage of sending the client identity in clear text; this is hard to avoid, as the server needs to build the key exchange data using its copy of the client's password, and thus needs to know the client's identity before any exchange. SRP-TLS is not yet used much, and is not implemented in the Microsoft security suites that are used by major web browsers.
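For readers who have not met SRP, the arithmetic behind the key exchange can be sketched in a few lines. This is a simplified rendering of the SRP-6a equations, not the SRP-TLS wire format. The modulus below is a toy 127-bit prime chosen so the example fits on the page (real deployments use the much larger groups listed in RFC 5054), the hashing of inputs is simplified, and the function names are mine. Note that the server stores a salted verifier derived from the password rather than the password itself.

```python
import hashlib
import secrets

N = 2**127 - 1  # toy prime modulus (assumption; far too small for real use)
g = 3           # toy generator (assumption)


def to_bytes(n: int) -> bytes:
    return n.to_bytes(16, "big")


def hash_int(*chunks: bytes) -> int:
    return int.from_bytes(hashlib.sha256(b"".join(chunks)).digest(), "big")


k = hash_int(to_bytes(N), to_bytes(g))  # SRP-6a multiplier


def private_exponent(salt: bytes, password: str) -> int:
    """x: derived from salt and password (simplified derivation)."""
    return hash_int(salt, password.encode("utf-8"))


def make_verifier(salt: bytes, password: str) -> int:
    """v = g^x mod N, stored by the server at registration time."""
    return pow(g, private_exponent(salt, password), N)


def srp_exchange(salt: bytes, verifier: int, client_password: str):
    """Run both sides in one function; return (client_key, server_key)."""
    a = 1 + secrets.randbelow(N - 2)        # client ephemeral secret
    A = pow(g, a, N)                        # sent in "Client Key Exchange"
    b = 1 + secrets.randbelow(N - 2)        # server ephemeral secret
    B = (k * verifier + pow(g, b, N)) % N   # sent in "Server Key Exchange"
    u = hash_int(to_bytes(A), to_bytes(B))  # scrambling parameter

    x = private_exponent(salt, client_password)
    client_s = pow((B - k * pow(g, x, N)) % N, a + u * x, N)
    server_s = pow((A * pow(verifier, u, N)) % N, b, N)
    return (hashlib.sha256(to_bytes(client_s)).digest(),
            hashlib.sha256(to_bytes(server_s)).digest())


salt = secrets.token_bytes(16)
verifier = make_verifier(salt, "open sesame")

client_key, server_key = srp_exchange(salt, verifier, "open sesame")
print(client_key == server_key)  # True: both sides derive the same key

client_key, server_key = srp_exchange(salt, verifier, "wrong guess")
print(client_key == server_key)  # False: a wrong password yields mismatched keys
```

Only `A` and `B` travel on the wire, and neither lets an observer test password guesses offline the way a plain challenge/response does. A real implementation would also reject degenerate values such as `A % N == 0` and would exchange proofs that both sides hold the same key.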

As we embrace the challenge of building a spoofing-resistant exchange, we probably do not want to give up the strength of public key cryptography. Instead of just replacing the certificate with a password, we probably want to combine certificate and password. We also do not want to make the user identity public, at least when the server certificate is not spoofed. The main problem is that we have to insert a new message in the TLS initial handshake, to send the identity of the client encrypted with the server's public key. This extra message changes the protocol specification, essentially from a single exchange (server certificate and client key) to a double exchange (identity request and client identity response, followed by the exchange of keys). The principle is simple, but the execution requires updating a key Internet standard, and that can take time.

While we wait for standards to be updated, we should apply some immediate defense in depth. Protect the password exchange instead of sending it in the clear, and if possible add code to detect a man-in-the-middle attack. For the web services that can afford it, develop applications that embed a copy of the sites' certificates and some rigorous checks. There is no reason to reward attackers who spoof security certificates.